This researcher programmed bots to fight racism on Twitter. It worked.

Source: Kevin Munger

Affiliation: The Washington Post

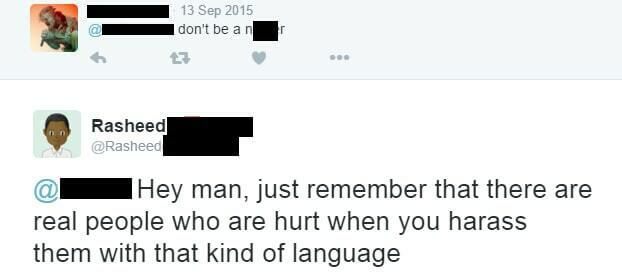

In this article, Kevin Munger describes an experiment he ran on Twitter to discourage users from using harassing language. Suspecting that peer-to-peer censorship would work better than heavy-handed censorship, Munger created four Twitter bots that identified tweets containing racial slurs and responded with a reminder that real people are hurt by offensive statements. Munger found that, overall, the bots were able to encourage people to use better language; however, an individual bot's success depended on the racial identity and number of followers of the bot account.

Keywords: Anonymity, Computer Science, Cyberbullying, Tech Ethics, Trolling